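This post describes a deep-learning method for 68-point facial landmark detection that uses a modified VGG as its prototype (hereafter "mini VGG"). It covers three parts: preparing the dataset, building the network model, and training. The project source code is on GitHub: GitHub link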

I. Dataset Preparation

1. Data Collection

The first category is public datasets:

The ibug datasets are the usual choice for 68-point facial landmark detection. The official site is:

https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/

The datasets that include both images and annotations (some free downloads contain only the annotations, without the images) are:

300W, AFW, HELEN, LFPW and IBUG, five datasets in total.

For 68-point landmark detection on video frames, the 300-VW dataset below can be used:

https://ibug.doc.ic.ac.uk/resources/300-VW/

For an introduction to these datasets, see: https://yinguobing.com/facial-landmark-localization-by-deep-learning-data-and-algorithm/

The second category is self-annotated data:

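Here the images I collected are labeled with an annotation tool. My tool produces a txt file containing the coordinates of the 68 points, which then has to be converted by the following script into the .pts format used by the public datasets: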

```python
from __future__ import division
```
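Only the first line of the original conversion script survives above. As a minimal sketch of the same step, assuming the annotation tool writes one `x y` pair per line (an assumed input layout; the helper name `txt_to_pts` is hypothetical), the conversion could look like this. The output follows the header and brace layout of the ibug `.pts` files:

```python
def txt_to_pts(txt_path, pts_path, n_points=68):
    """Convert a txt file with one 'x y' pair per line into ibug-style .pts.

    Assumption: the txt file holds exactly n_points non-empty lines, each with
    two whitespace-separated numbers (a hypothetical input layout).
    """
    with open(txt_path) as f:
        coords = [line.split() for line in f if line.strip()]
    if len(coords) != n_points:
        raise ValueError(f"expected {n_points} points, got {len(coords)}")
    with open(pts_path, "w") as f:
        # Header and braces as in the ibug .pts annotation files
        f.write("version: 1\n")
        f.write(f"n_points:  {n_points}\n")
        f.write("{\n")
        for x, y in coords:
            f.write(f"{float(x):.3f} {float(y):.3f}\n")
        f.write("}\n")
```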

After this step, the dataset can be organized into the following structure:

The dataset also needs to be split into a training set and a test set.
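A minimal sketch of the train/test split, assuming each sample is identified by its image path. `split_dataset`, the 10% test ratio and the fixed seed are illustrative choices, not the project's settings:

```python
import random


def split_dataset(items, test_ratio=0.1, seed=0):
    """Shuffle sample identifiers and split them into (train, test) lists."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    rng.shuffle(items)
    n_test = int(round(len(items) * test_ratio))
    return items[n_test:], items[:n_test]
```

Fixing the seed keeps the split reproducible, so test images do not drift into the training set when the preprocessing is rerun.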

2. Data Preprocessing

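With the five datasets ready, the next step is a series of processing operations on them. Landmark detection runs after face detection: the image is cropped to the face region given by the detection box, and landmarks are detected only within that crop. This removes much of the interference from the rest of the image and lets the network focus on the landmark task. Therefore, for training, a face-only region has to be cropped from each image based on its annotated landmark positions.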
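The crop region can be derived directly from the annotated points. The sketch below takes the landmarks' bounding box, enlarges it by a margin and makes it square. `face_box_from_landmarks` and the 20% margin are hypothetical, not values taken from the referenced post. The box may extend past the image border, in which case the image must be padded before cropping:

```python
import numpy as np


def face_box_from_landmarks(pts, margin=0.2):
    """Return a square (x0, y0, x1, y1) box around the landmarks.

    pts: (N, 2) array of (x, y) pixel coordinates, e.g. N = 68.
    margin: fraction of the larger landmark extent added on each side
            (an illustrative default).
    """
    pts = np.asarray(pts, dtype=np.float64)
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    cx, cy = (x_min + x_max) / 2.0, (y_min + y_max) / 2.0
    # Square side: the larger extent, grown by the margin on both sides
    side = max(x_max - x_min, y_max - y_min) * (1.0 + 2.0 * margin)
    half = side / 2.0
    return cx - half, cy - half, cx + half, cy + half
```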

The processing mainly follows:

https://yinguobing.com/facial-landmark-localization-by-deep-learning-data-collate/

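However, preprocessing the image also changes the landmark positions. The code shared by that author transforms the images but does not update the corresponding landmark coordinates, so I modified it to process the image and the landmark coordinates together and to generate labels in the format our network needs for training. The code is as follows: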

```python
# -*- coding: utf-8 -*-
```
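Only the script's first line survives above, so here is a sketch of the coordinate side of this step: shifting the landmarks into the crop box, normalizing them to [0, 1], and writing one label line per image. The `path v1 ... v136` layout and the normalization are assumptions about what the modified data layer reads, and `landmarks_to_label` is a hypothetical helper:

```python
import numpy as np


def landmarks_to_label(image_path, pts, box):
    """Map landmarks into a crop box and build one training label line.

    box: (x0, y0, x1, y1) of the face crop in original-image pixels.
    Coordinates are normalized to [0, 1] relative to the box (assumed format).
    """
    x0, y0, x1, y1 = box
    pts = np.asarray(pts, dtype=np.float64)
    norm = np.empty_like(pts)
    norm[:, 0] = (pts[:, 0] - x0) / (x1 - x0)
    norm[:, 1] = (pts[:, 1] - y0) / (y1 - y0)
    # Flatten to x1 y1 x2 y2 ... (136 values for 68 points)
    values = " ".join(f"{v:.6f}" for v in norm.reshape(-1))
    return image_path + " " + values
```

Normalizing relative to the box means the label stays valid when the crop is resized to the network's input size, since resizing scales the image and the box together.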

3. Data Augmentation

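Together, the datasets above contain only about five thousand images, clearly not enough for a neural network that needs large amounts of training data, so I augment them. Because of the project's requirements, the augmentation is mainly rotation.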

Rotation can follow one of two strategies:

1. Rotate the original image and keep its original size.

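2. Rotate the original image and expand the borders of the output outward, so that the rotated image does not cut off the corners of the original.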
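Both strategies come down to one 2x3 affine matrix. The sketch below builds it with the same formula as OpenCV's `cv2.getRotationMatrix2D` (scale 1, rotation about the image centre). For strategy 2 it enlarges the output canvas to `h|sin θ| + w|cos θ|` by `h|cos θ| + w|sin θ|` and shifts the translation so the rotated image stays centred. `rotation_matrix` is a hypothetical helper:

```python
import numpy as np


def rotation_matrix(w, h, angle_deg, expand=False):
    """2x3 affine matrix rotating a w x h image about its centre.

    Same convention as cv2.getRotationMatrix2D with scale 1 (positive angle
    rotates counter-clockwise as displayed). expand=False keeps the original
    size (strategy 1); expand=True enlarges the canvas (strategy 2).
    Returns (M, out_w, out_h).
    """
    theta = np.deg2rad(angle_deg)
    a, b = np.cos(theta), np.sin(theta)
    cx, cy = w / 2.0, h / 2.0
    M = np.array([[a, b, (1 - a) * cx - b * cy],
                  [-b, a, b * cx + (1 - a) * cy]])
    if not expand:
        return M, w, h
    # Bounding size of the rotated image, rounded to whole pixels
    out_w = int(np.round(h * abs(b) + w * abs(a)))
    out_h = int(np.round(h * abs(a) + w * abs(b)))
    # Move the rotation centre to the centre of the larger canvas
    M[0, 2] += out_w / 2.0 - cx
    M[1, 2] += out_h / 2.0 - cy
    return M, out_w, out_h
```

The matrix can then be passed to `cv2.warpAffine(img, M, (out_w, out_h))`.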

Four rotation settings are used: ±15°, ±30°, ±45° and ±60°. Three problems have to be solved during augmentation:

(1) The black regions produced by rotation may affect the features the convolutions learn.

(2) Rotating the face-only crops produced above may move some annotated landmarks outside the image, so those landmarks are lost.

(3) The landmark coordinates must be recomputed after rotation.
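For problem (2), a simple guard is to check whether every rotated landmark still lies inside the output image and to discard, or handle differently, any rotation that fails the check. `landmarks_inside` is a hypothetical helper, a sketch of the check only:

```python
import numpy as np


def landmarks_inside(pts, w, h):
    """True if every (x, y) landmark lies within a w x h image."""
    pts = np.asarray(pts, dtype=np.float64)
    xs, ys = pts[:, 0], pts[:, 1]
    # Valid pixel coordinates run from 0 to size - 1
    return bool(np.all((xs >= 0) & (xs <= w - 1) & (ys >= 0) & (ys <= h - 1)))
```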

For the first problem:

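One option is the method in https://blog.csdn.net/guyuealian/article/details/77993410, which fills the black regions by quadratic interpolation of the edge values. However, this can produce some strange edge artifacts, as shown in the figure below: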

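I am concerned that these strange features may affect what the convolutional network ultimately learns, but I have not yet found a suitable solution. If anyone knows one, please leave a comment.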

For the third problem:

The conversion can follow the explanation in https://blog.csdn.net/songzitea/article/details/51043743.

Another well-written post worth reading: https://charlesnord.github.io/2017/04/01/rotation/
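In short, the landmarks must go through the same affine transform as the image. A minimal sketch, assuming `M` is the 2x3 matrix passed to `cv2.warpAffine` without the `WARP_INVERSE_MAP` flag, so a source point `[x, y, 1]` lands at `M · [x, y, 1]` in the output. `rotate_landmarks` is a hypothetical helper:

```python
import numpy as np


def rotate_landmarks(pts, M):
    """Apply a 2x3 affine matrix M to an (N, 2) array of (x, y) landmarks."""
    pts = np.asarray(pts, dtype=np.float64)
    ones = np.ones((pts.shape[0], 1))
    # Homogeneous coordinates [x, y, 1], one row per point, times M^T
    return np.hstack([pts, ones]) @ np.asarray(M, dtype=np.float64).T
```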

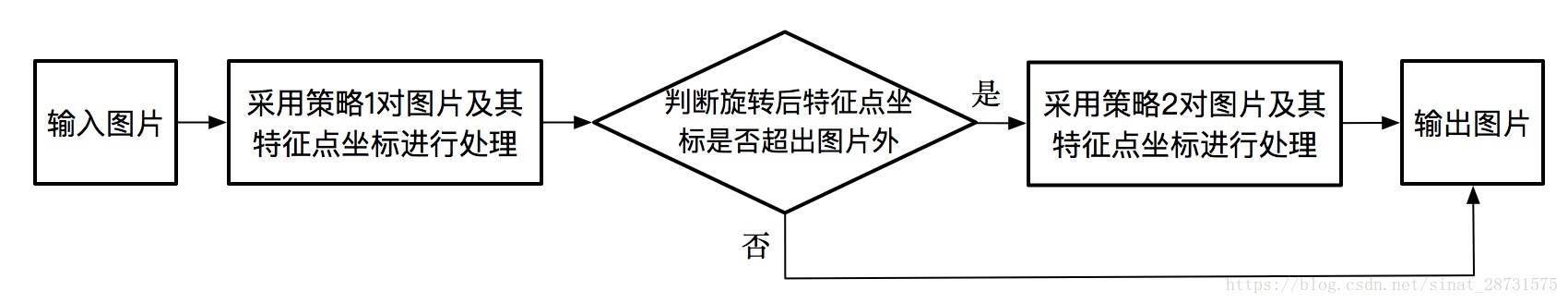

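With these problems solved, I noticed that rotating with strategy 2 produces the large blocks of strange features shown in the image above, while strategy 1 does not produce such large artifacts. My overall logic for rotation augmentation is therefore as follows: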

Following this procedure, I wrote the code below:

```python
#-*- coding: UTF-8 -*-
```

With this step done, all data preprocessing is complete.

II. Building the Network Model

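Caffe's image data layer supports only a single label per image, so the image data layer used in this post was modified to accept 136 label values (an x and a y coordinate for each of the 68 landmarks):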

```cpp
#ifdef USE_OPENCV
```
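The modified layer itself is C++. The sketch below only illustrates, in Python, the per-line parsing such a layer has to perform: one image path followed by 136 numbers. The line layout is an assumption, and `parse_label_line` is hypothetical:

```python
def parse_label_line(line, n_labels=136):
    """Split 'image_path v1 ... v136' into (path, list of floats)."""
    # Split from the right so a path containing spaces stays in one piece
    parts = line.strip().rsplit(None, n_labels)
    if len(parts) != n_labels + 1:
        raise ValueError(f"expected a path and {n_labels} values")
    return parts[0], [float(v) for v in parts[1:]]
```

Splitting from the right with a fixed count keeps image paths that contain spaces intact.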

After this modification, rebuild Caffe.

The network definition and solver.prototxt are in the landmark_detec folder of the GitHub repository.

III. Training the Model

With all the data and files above in place, training can be started with the following shell script:

```sh
#!/bin/sh
```
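The "fixed" and "multistep" learning-rate policies discussed in the next paragraph are set in `solver.prototxt` through `lr_policy`, `base_lr`, `gamma` and `stepvalue`. The sketch below reproduces their formulas for reference. The function name and the example values are illustrative, not the project's solver settings:

```python
def caffe_lr(iteration, base_lr, policy="fixed", gamma=0.1, stepvalues=()):
    """Learning rate under Caffe's 'fixed' and 'multistep' policies.

    fixed:     lr = base_lr
    multistep: lr = base_lr * gamma ** k, where k is the number of
               stepvalues already reached (stepvalues in ascending order).
    """
    if policy == "fixed":
        return base_lr
    if policy == "multistep":
        k = sum(1 for s in stepvalues if iteration >= s)
        return base_lr * gamma ** k
    raise ValueError(f"unsupported policy: {policy}")
```

Under `multistep` the rate drops by a factor of `gamma` each time training passes a `stepvalue`, which can help the loss keep decreasing after it plateaus at a fixed rate.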

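Training first used the "fixed" learning-rate policy. After 20,000 iterations the loss stopped decreasing, so I switched to the "multistep" policy. The resulting model works well, and inference takes roughly 80 ms on my Mac. It can be tested with the following script: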

```python
# coding=utf-8
```
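If the training labels are coordinates normalized to [0, 1] within the face crop (an assumption; the exact label format is defined in the project's repository), the 136 network outputs must be mapped back to pixel positions before they are drawn. `decode_landmarks` is a hypothetical helper:

```python
import numpy as np


def decode_landmarks(output, box):
    """Map 136 normalized outputs back to 68 (x, y) pixel coordinates.

    box: (x0, y0, x1, y1) of the face crop in the original image.
    """
    x0, y0, x1, y1 = box
    # np.array copies, so the caller's output buffer is not modified
    pts = np.array(output, dtype=np.float64).reshape(68, 2)
    pts[:, 0] = pts[:, 0] * (x1 - x0) + x0
    pts[:, 1] = pts[:, 1] * (y1 - y0) + y0
    return pts
```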

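While writing the test code above, I found that on the Mac the caffe package conflicts with the cv2 package, so displaying the image with img.show() does not work properly. If anyone knows how to fix this, please let me know.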

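That is the whole process. It was also the second complete project of my internship. If anything above is wrong or inaccurate, please point it out in the comments. Many thanks.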